Research network to focus on AI, integrated circuits

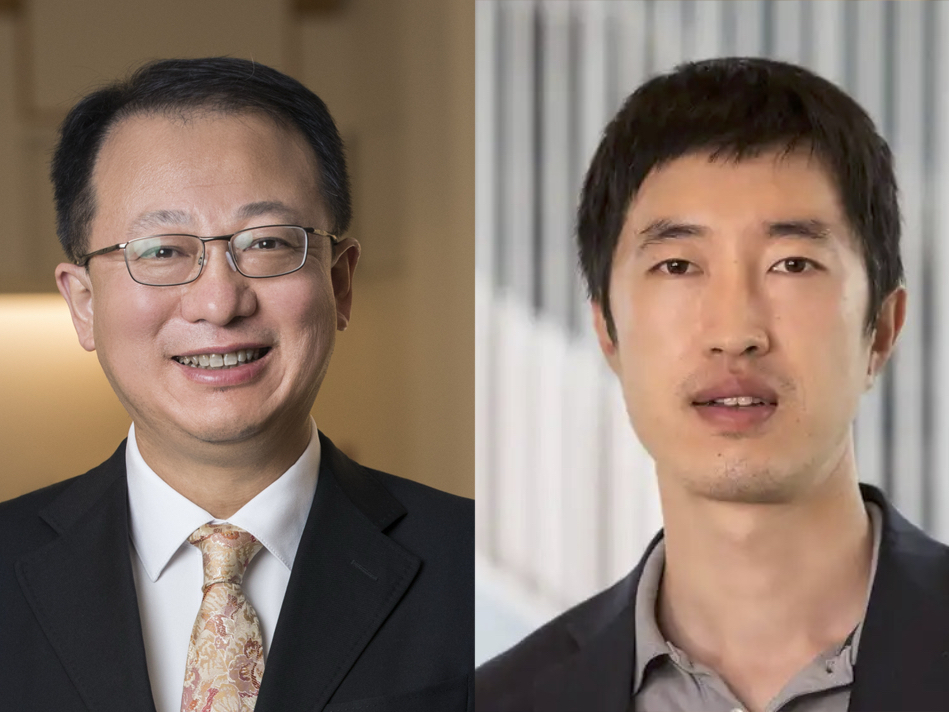

Shantanu Chakrabartty to lead Neuromorphic Integrated Circuits Education network

With the increased demand for efficient hardware for artificial intelligence and integrated circuits comes a need to educate students and researchers on how to design and create these tools.

To address this need, Shantanu Chakrabartty, the Clifford W. Murphy Professor and vice dean for research and graduate education in the McKelvey School of Engineering, is leading a Neuromorphic Integrated Circuits Education (NICE) research coordination network (RCN) with a three-year, $900,000 grant from the National Science Foundation. His co-investigators are Andreas Andreou, professor of electrical and computer engineering at Johns Hopkins University's Whiting School of Engineering, and Jason Eshraghian, assistant professor of electrical and computer engineering at the University of California, Santa Cruz.

Neuromorphic engineering, which is inspired by the function of the human brain, has grown over the past several decades with the increased demand for AI and integrated circuits. By studying the brain's structure and its neural mechanisms, researchers will develop hardware and algorithms that maximize computational performance while using the least amount of power.

The NICE network will allow researchers to acquire design skills that can be applied to integrated circuit design, helping to close the education and workforce gap in integrated circuit design and fabrication. The project will use the infrastructure of the annual Telluride Neuromorphic Cognition Engineering Workshop to organize discussion groups and hands-on training events and to create research cohorts around neuromorphic integrated circuits.

“We are using the extensive expertise in the network to investigate novel neuromorphic architectures, circuits and hardware that could lead to significant performance advantages when compared with other computing architectures that use central processing units, graphical processing units or quantum processors,” Chakrabartty said.

For example, an insect brain can learn new tasks and continuously adapt over its lifetime with a power budget that is smaller than that of a quartz wristwatch.

“Nothing comparable is remotely achievable by current state-of-the-art AI technology,” Chakrabartty said. “Thus, addressing this performance gap will pave the way for designing tiny AI systems that are constrained by size, weight and power.”

The RCN has three subnetworks: one that will focus on developing behavior models and software; one that will focus on designing neuromorphic process design kits; and one that will establish a testing infrastructure and create benchmarks to evaluate neuromorphic integrated circuit performance.

Other collaborating institutions are Georgia Tech, Yale University, Oklahoma State University and the University of California San Diego.