AI-based algorithms need to be evaluated for their clinical applications

Abhinav Jha-led research focuses on developing algorithms to evaluate nuclear medicine images for clinical applications, such as cancer diagnosis and treatment

Artificial intelligence has quickly become a powerful tool in patient care and medical diagnostics. One of the most widely explored and used applications of AI is medical image segmentation, in which an AI tool delineates regions of interest, such as tumors, in patient scans. However, a new study reveals that the metrics commonly used to evaluate how well AI algorithms perform segmentation don’t always align with performance in real-world clinical applications.

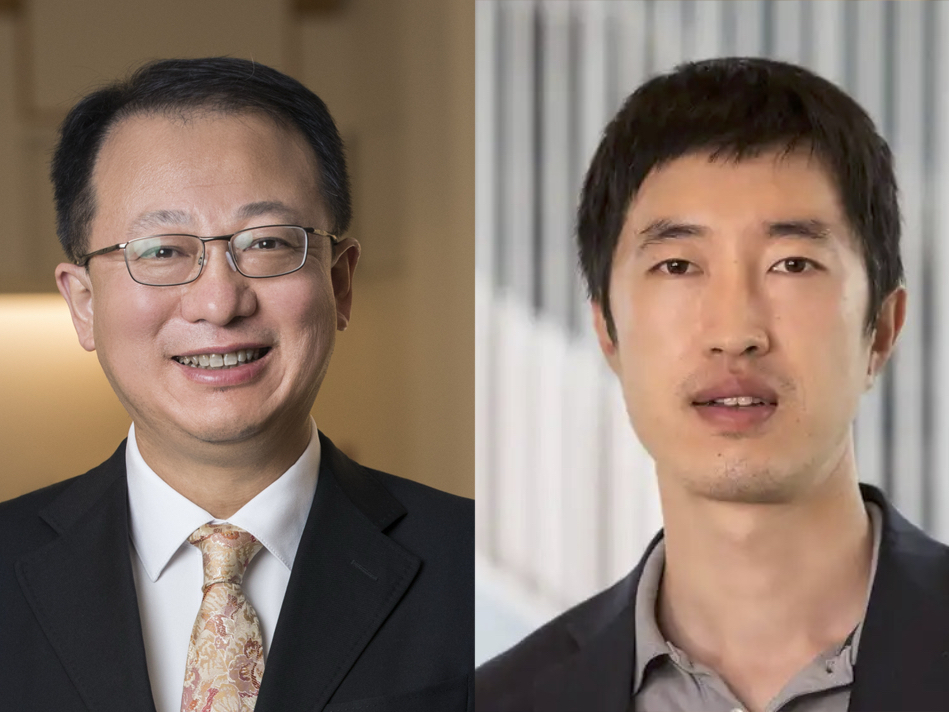

In a study published March 1 in the Journal of Nuclear Medicine, Abhinav Jha, assistant professor of biomedical engineering at the McKelvey School of Engineering and of radiology at the Mallinckrodt Institute of Radiology at the School of Medicine, both at Washington University in St. Louis, examined positron emission tomography (PET) segmentation algorithms.

Traditionally, these algorithms are evaluated using task-agnostic metrics, meaning metrics that are not explicitly designed to reflect performance on clinical tasks. Jha’s study, a retrospective analysis of multi-center clinical trial data, assessed how well these task-agnostic metrics aligned with an algorithm’s performance on clinically relevant tasks.

“Image segmentation is a burdensome task,” Jha explained. “It requires drawing a 3D boundary around an object of interest – such as a tumor – in an image with a lot of variability in quality, resolution and other factors that make accurate segmentation difficult. AI algorithms have the potential to do this task accurately, precisely, quickly and cheaply, reducing the burden on doctors. These algorithms are already being used in clinical settings now, but every algorithm can give different boundaries, so how do we know which is most accurate?”

Jha and his collaborators, Joyce Mhlanga, MD, associate professor of radiology, and Barry Siegel, MD, professor of radiology and medicine, both at WashU’s School of Medicine, compared the traditional metrics used to evaluate segmentation algorithms, which generally measure the volume overlap and shape agreement between a segmentation and a reference, with metrics that quantify how large and how metabolically active a tumor is. Their question: Does evaluating segmentation algorithms with task-agnostic metrics correlate with how well those algorithms perform on clinically relevant tasks?
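To make the distinction concrete, here is a minimal Python sketch comparing one common volume-overlap metric, the Dice similarity coefficient, with two common quantitative PET measures of tumor size and metabolic activity, metabolic tumor volume (MTV) and total lesion glycolysis (TLG). These particular metrics, the function names, the voxel size and the toy masks are assumptions chosen for illustration (flattened voxel indices stand in for real 3D scans); this is not the study’s code or data. It shows how a segmentation with a higher Dice score can still give a worse estimate of tumor volume.

```python
# Toy illustration (hypothetical data, not from the study): a higher Dice
# score does not guarantee a more accurate tumor volume or TLG estimate.
from typing import Dict, Set

VOXEL_ML = 0.064  # assumed voxel volume in mL (4 mm isotropic voxels)


def dice(a: Set[int], b: Set[int]) -> float:
    """Dice similarity coefficient: 2|A & B| / (|A| + |B|)."""
    return 2 * len(a & b) / (len(a) + len(b))


def mtv(mask: Set[int], voxel_ml: float = VOXEL_ML) -> float:
    """Metabolic tumor volume: voxels in the mask times the voxel volume."""
    return len(mask) * voxel_ml


def tlg(mask: Set[int], suv: Dict[int, float], voxel_ml: float = VOXEL_ML) -> float:
    """Total lesion glycolysis: summed SUV over the mask times the voxel volume."""
    return sum(suv[v] for v in mask) * voxel_ml


def relative_error(estimate: float, reference: float) -> float:
    """Signed error of an estimate relative to the reference value."""
    return (estimate - reference) / reference


# Hypothetical 1D "scan" of 120 voxels: tumor at indices 0-99 (SUV 5.0),
# background at indices 100-119 (SUV 1.0).
suv = {v: (5.0 if v < 100 else 1.0) for v in range(120)}
truth = set(range(100))        # reference (ground-truth) tumor mask
cand_a = set(range(10, 110))   # shifted: right size, 10 voxels misplaced
cand_b = set(range(90))        # under-segmented: misses 10 tumor voxels

for name, cand in [("A (shifted)", cand_a), ("B (too small)", cand_b)]:
    print(
        f"{name}: Dice={dice(truth, cand):.3f}, "
        f"MTV error={relative_error(mtv(cand), mtv(truth)):+.1%}, "
        f"TLG error={relative_error(tlg(cand, suv), tlg(truth, suv)):+.1%}"
    )
```

Ranked by Dice, candidate B looks better (about 0.947 versus 0.900), yet it underestimates MTV by about 10%, while candidate A recovers the volume exactly and has the smaller TLG error. A real evaluation would use 3D masks from many patients and examine how the metrics correlate across the whole dataset rather than comparing two toy cases.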

The team analyzed multi-center clinical trial data on lung cancer, evaluating segmentation algorithms built with multiple machine-learning methodologies. They also evaluated different configurations of a common deep-learning architecture, U-Net, and reached the same conclusion.

“We observed that task-agnostic metrics did not correlate with metrics we care about when looking at performance on clinical tasks,” Jha said. “This underscores the need for an objective, task-based evaluation of image-segmentation algorithms and the importance of aligning algorithmic performance metrics with real-world clinical applications.”

A preliminary version of this work was a finalist for the Robert F. Wagner Best Student Paper award at the 2023 SPIE Medical Imaging Conference. Jha also led a multi-institutional, multi-agency team tasked with developing a framework for evaluating AI-based medical imaging methods, and he led the Evaluation Team of the AI Task Force of the Society of Nuclear Medicine and Molecular Imaging (SNMMI). That team proposed the Recommendations for Evaluation of AI for Nuclear Medicine (RELAINCE) guidelines, which were released in 2022 and are consistent with this latest research.

Liu Z, Mhlanga JC, Xia H, Siegel BA, Jha A. Need for objective task-based evaluation of image-segmentation algorithms for quantitative PET: A study with ACRIN 6668/RTOG 0235 multi-center clinical trial data. Journal of Nuclear Medicine, March 1, 2024. DOI: https://doi.org/10.2967/jnumed.123.266018

This work was supported by the National Institutes of Health’s National Institute of Biomedical Imaging and Bioengineering (R01-EB031051, R01-EB031962, R56-EB028287, and R21-EB024647 Trailblazer Award).