Deep learning models can be trained with limited data

Ulugbek Kamilov, graduate students, develop method that could reduce errors in computational imaging

Deep learning models, such as those used in medical imaging to help detect disease or abnormalities, must be trained with a lot of data. However, often there isn’t enough data available to train these models, or the data is too diverse.

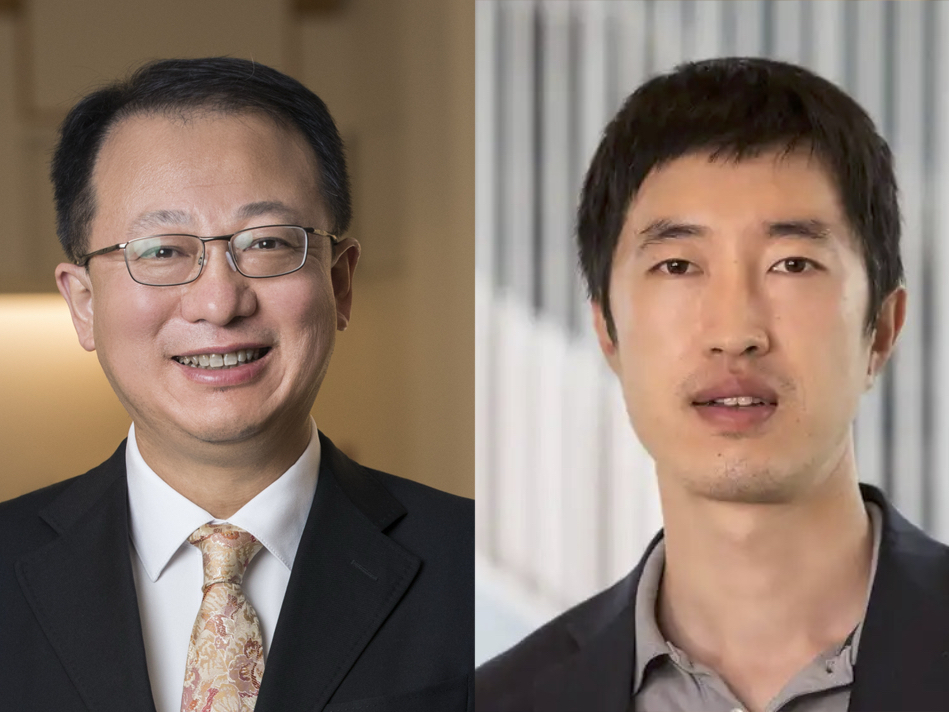

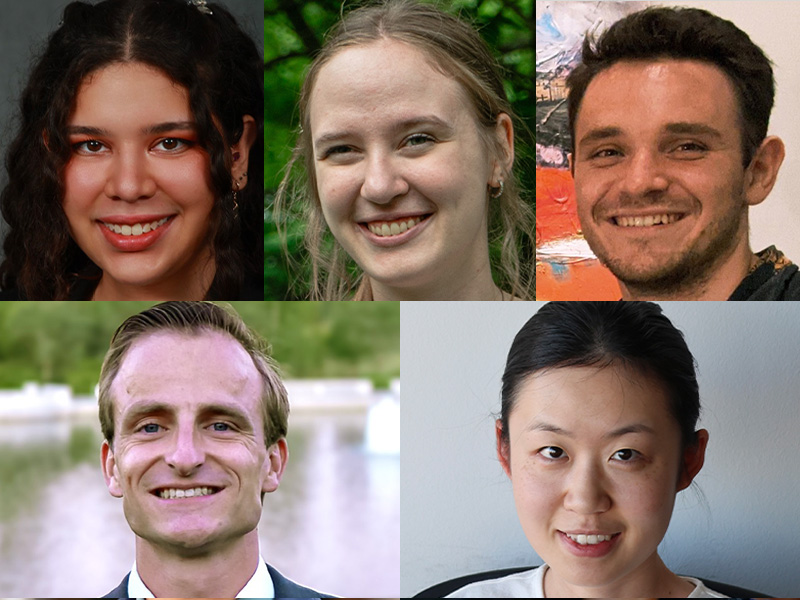

Ulugbek Kamilov, associate professor of computer science & engineering and of electrical & systems engineering in the McKelvey School of Engineering at Washington University in St. Louis, along with Shirin Shoushtari, Jiaming Liu and Edward Chandler, doctoral students in his group, has developed a method to get around this common problem in image reconstruction. The team will present the results of the research this month at the International Conference on Machine Learning in Vienna, Austria.

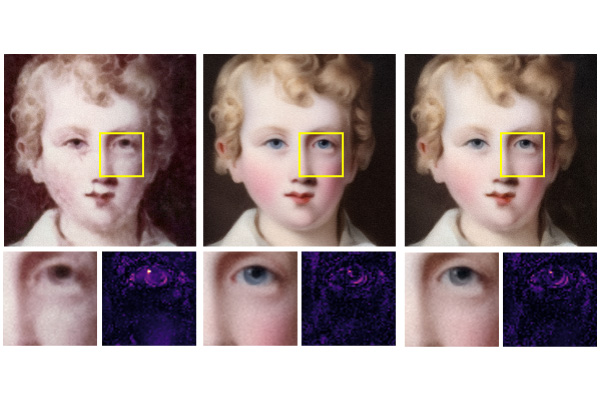

For example, MRI data used to train deep learning models could come from different vendors, hospitals, machines, patients or body parts imaged. A model trained on one type of data could introduce errors when applied to other data. To avoid those errors, the team adopted the widely used deep learning approach known as Plug-and-Play Priors, accounted for the shift between the data on which the model was trained and the new data, and adapted the model to the incoming set of data.
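For readers curious about how Plug-and-Play Priors works in practice, the sketch below shows the general idea in Python. It is an illustration only and is not the team's code: the function names `smoothing_denoiser` and `pnp_admm`, the one-dimensional test signal, the random sampling mask and the parameter values are all assumptions made for this example. In a real system the denoiser would be a trained neural network, which is the component that can become mismatched with new data; here a simple smoothing filter stands in for it, and no adaptation step is shown.

```python
import numpy as np


def smoothing_denoiser(v):
    """Stand-in for a learned denoiser (assumption): a small triangular filter."""
    kernel = np.array([1.0, 2.0, 3.0, 2.0, 1.0]) / 9.0
    return np.convolve(v, kernel, mode="same")


def pnp_admm(y, mask, denoiser, gamma=1.0, num_iters=200):
    """Reconstruct x from y = mask * x with plug-and-play ADMM iterations."""
    z = y.copy()          # denoised estimate, started from the zero-filled data
    s = np.zeros_like(y)  # scaled dual variable
    for _ in range(num_iters):
        v = z - s
        # Data-consistency step: proximal operator of 0.5 * ||mask * x - y||^2
        x = (gamma * mask * y + v) / (gamma * mask + 1.0)
        # Prior step: the denoiser takes the place of a regularizer's proximal operator
        z = denoiser(x + s)
        # Dual update
        s = s + x - z
    return x


# Toy example (hypothetical data): recover a sine wave from about half of its samples.
rng = np.random.default_rng(0)
x_true = np.sin(2 * np.pi * np.arange(200) / 50)
mask = (rng.random(200) < 0.5).astype(float)
y = mask * x_true
x_hat = pnp_admm(y, mask, smoothing_denoiser)

print("zero-filled RMS error:", np.sqrt(np.mean((y - x_true) ** 2)))
print("PnP-ADMM RMS error:   ", np.sqrt(np.mean((x_hat - x_true) ** 2)))
```

Because the denoiser is passed in as an argument, swapping in a different one (for instance, one adapted to a new scanner or body part) would leave the rest of the loop unchanged.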

“With our method, it doesn’t matter if you don’t have a lot of training data,” Shoushtari said. “Our method enables us to adapt deep learning models using a small set of training data, no matter what hospital, what machine or what body parts the images come from.

“What is significant about the domain adaptation strategy is that we can reduce the errors we face in imaging due to a limited set of data,” Shoushtari said. “This could help us apply deep learning to problems that were previously deemed impossible due to the data requirements.”

One proposed use for this method would be in acquiring data from MRI, which requires patients to lie still for long periods of time. Any movement by the patient leads to errors.

“We have considered acquiring the data from the MRI in a shorter time,” Shoushtari said. “While shorter scans typically lead to lower quality images, our method can be used to computationally increase image quality as if the patient was in the machine for a longer time. The key innovation in our new approach is that it requires only tens of images to adapt an existing MRI model to new data.”

The method is also applicable beyond radiology, and the team is collaborating with other colleagues to adapt the method to scientific imaging, microscopic imaging and other applications in which data can be represented as an image.

Shoushtari S, Liu J, Chandler EP, Asif MS, Kamilov US. Prior Mismatch and Adaptation in PnP-ADMM with a Nonconvex Convergence Analysis. International Conference on Machine Learning, July 21-27, 2024. https://icml.cc/virtual/2024/poster/34765

The source code is available on GitHub: https://github.com/wustl-cig/MMPnPADMM

Funding for this research was provided in part by the National Science Foundation CAREER Award (CCF-2043134 and CCF-2046293).