Break it down: A new way to address a common computing problem

A computational framework for solving linear inverse problems takes a parallel computing approach

In this era of big data, some problems in scientific computing are so large, so complex and contain so much information that solving them is too big a task for most computers.

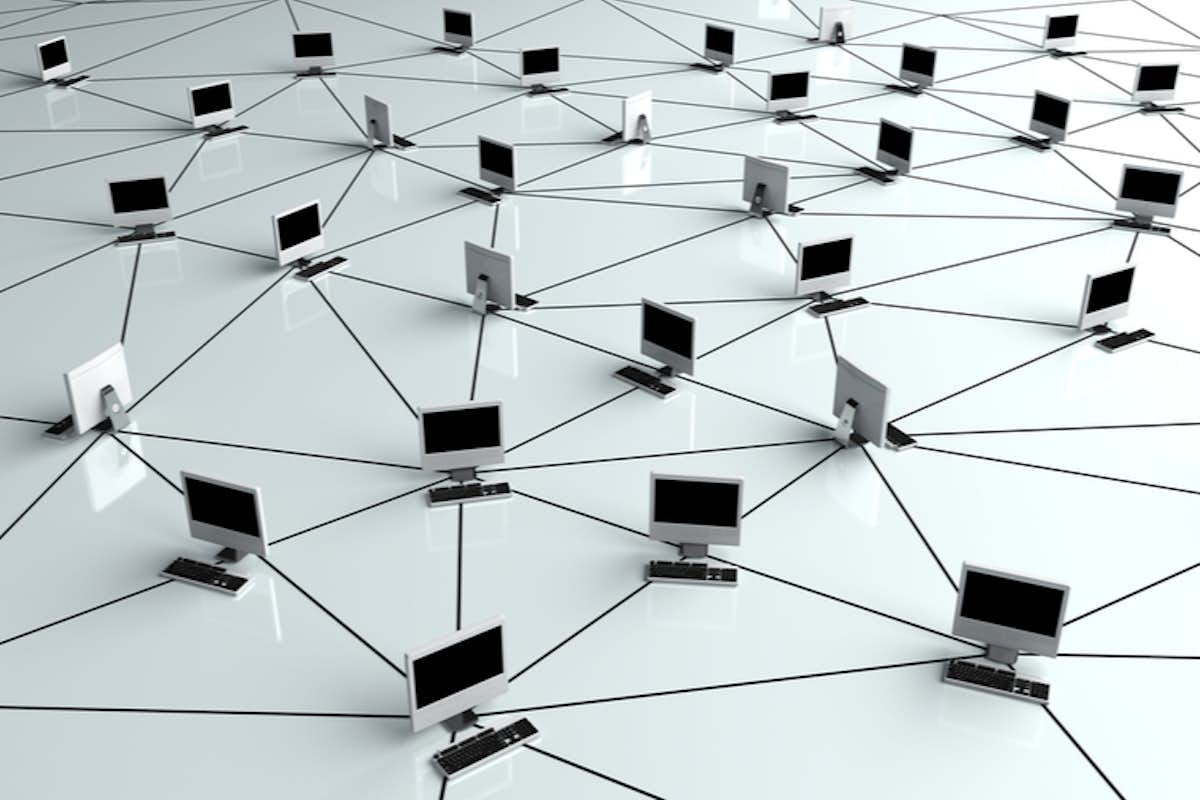

Now, researchers at the McKelvey School of Engineering at Washington University in St. Louis have developed a new algorithm for solving a common class of problems, known as linear inverse problems, by breaking them down into smaller tasks, each of which can be solved in parallel on standard computers.

The research, from the lab of Jr-Shin Li, professor in the Preston M. Green Department of Electrical & Systems Engineering, was published July 30 in the journal Scientific Reports.

In addition to providing a framework for solving this class of problems, the approach, called Parallel Residual Projection (PRP), also delivers enhanced security and mitigates privacy concerns.

Linear inverse problems are those that take observational data and try to find a model that describes it. In their simplest form, they may look familiar: 2x + y = 1, x - y = 3. Many a high school student has solved for x and y without the help of a supercomputer.
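For readers who want to check the arithmetic, here is a minimal sketch of that two-equation example solved exactly with Cramer's rule. The function name `solve_2x2` is made up for this illustration and has nothing to do with the researchers' software.

```python
from fractions import Fraction

def solve_2x2(a11, a12, b1, a21, a22, b2):
    """Solve a11*x + a12*y = b1 and a21*x + a22*y = b2 exactly (Cramer's rule)."""
    det = Fraction(a11 * a22 - a12 * a21)
    if det == 0:
        raise ValueError("the two equations do not have a unique solution")
    x = Fraction(b1 * a22 - a12 * b2) / det
    y = Fraction(a11 * b2 - b1 * a21) / det
    return x, y

# The article's example: 2x + y = 1 and x - y = 3
x, y = solve_2x2(2, 1, 1, 1, -1, 3)
print(x, y)  # prints: 4/3 -5/3
```

Substituting back confirms the answer: 2(4/3) + (-5/3) = 1 and 4/3 - (-5/3) = 3.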

But as more researchers in different fields collect increasing amounts of data in order to gain deeper insights, these equations continue to grow in size and complexity.

“We developed a computational framework to solve for the case when there are thousands or millions of such equations and variables,” Li said.

The project was conceived while the team was working on research problems from other fields involving big data. Li’s lab had been working with a biologist researching the network of neurons that deal with the sleep-wake cycle.

“In the context of network inference, looking at a network of neurons, the inverse problem looks like this,” said Vignesh Narayanan, a research associate in Li’s lab:

Given the data recorded from a bunch of neurons, what is the ‘model’ that describes how these neurons are connected with each other?

“In an earlier work from our lab, we showed that this inference problem can be formulated as a linear inverse problem,” Narayanan said.

If the system has a few hundred nodes (in this case, the nodes are the neurons), the matrix that describes the interactions among neurons could be millions by millions; that’s huge.

“Storing this matrix itself exceeds the memory of a common desktop,” said Wei Miao, a PhD student in Li’s lab.
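A back-of-the-envelope calculation shows the scale. The sketch below assumes, purely for illustration, a dense matrix of exactly one million by one million entries stored as 8-byte floating-point numbers; the article does not give the actual dimensions or storage format.

```python
def dense_matrix_bytes(rows, cols, bytes_per_entry=8):
    """Memory needed to hold every entry of a dense rows-by-cols matrix."""
    return rows * cols * bytes_per_entry

# Assumed size: one million rows by one million columns of 64-bit floats
size_bytes = dense_matrix_bytes(10**6, 10**6)
size_terabytes = size_bytes / 10**12
print(size_terabytes, "TB")  # prints: 8.0 TB
```

Under those assumptions the matrix alone needs about 8 terabytes, far more than the memory of a typical desktop.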

Add to that the fact that such complex systems are often dynamic, as is our understanding of them. “Say we already have a solution, but now I want to consider interaction of some additional cells,” Miao said. Instead of starting a new problem and solving it from scratch, PRP adds flexibility and scalability. “You can manipulate the problem any way you want.”

Even if you do happen to have a supercomputer, Miao said, “There is still a chance that by breaking down the big problem, you can solve it faster.”

In addition to breaking down a complex problem and solving the pieces in parallel on different machines, the computational framework also, importantly, consolidates the results and computes an accurate solution to the original problem.
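The general idea of splitting, solving in parallel and consolidating can be sketched with a much older textbook technique. The code below is not the PRP algorithm from the paper. It is a simple averaged row-projection scheme (in the spirit of the Kaczmarz and Cimmino methods), and the names `kaczmarz_sweep` and `solve_by_blocks` are invented for this illustration. Each worker sees only its own block of equations, and the proposals from all workers are averaged after every round.

```python
from concurrent.futures import ThreadPoolExecutor

def kaczmarz_sweep(block, x):
    """Project the guess x onto each equation in one block, one after another."""
    x = list(x)
    for row, rhs in block:
        residual = rhs - sum(a * v for a, v in zip(row, x))
        norm_sq = sum(a * a for a in row)
        x = [v + residual * a / norm_sq for a, v in zip(row, x)]
    return x

def solve_by_blocks(blocks, n_unknowns, iterations=200):
    """Illustrative block-parallel solver for a consistent linear system."""
    x = [0.0] * n_unknowns
    with ThreadPoolExecutor(max_workers=len(blocks)) as pool:
        for _ in range(iterations):
            # Each worker only ever touches its own block of equations.
            partials = list(pool.map(lambda blk: kaczmarz_sweep(blk, x), blocks))
            # Consolidation step: average the workers' proposals.
            x = [sum(vals) / len(partials) for vals in zip(*partials)]
    return x

# The article's small example, split across two workers.
blocks = [
    [([2.0, 1.0], 1.0)],   # worker 1 holds: 2x + y = 1
    [([1.0, -1.0], 3.0)],  # worker 2 holds: x - y = 3
]
solution = solve_by_blocks(blocks, n_unknowns=2)
print(solution)
```

On this toy system the averaged guesses settle on x = 4/3 and y = -5/3, the same answer as solving both equations together. Threads are used here only to keep the sketch short; a real deployment would more likely place each block on a separate machine.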

An unintended benefit of PRP is enhanced data security and privacy. When a credit card company uses algorithms to research fraud, or a hospital wants to analyze its massive database, “no one wants to give all of that access to one individual,” Narayanan said.

“This was an extra benefit that we didn’t even strive for,” Narayanan said.