Exploring the flatland of computer memory

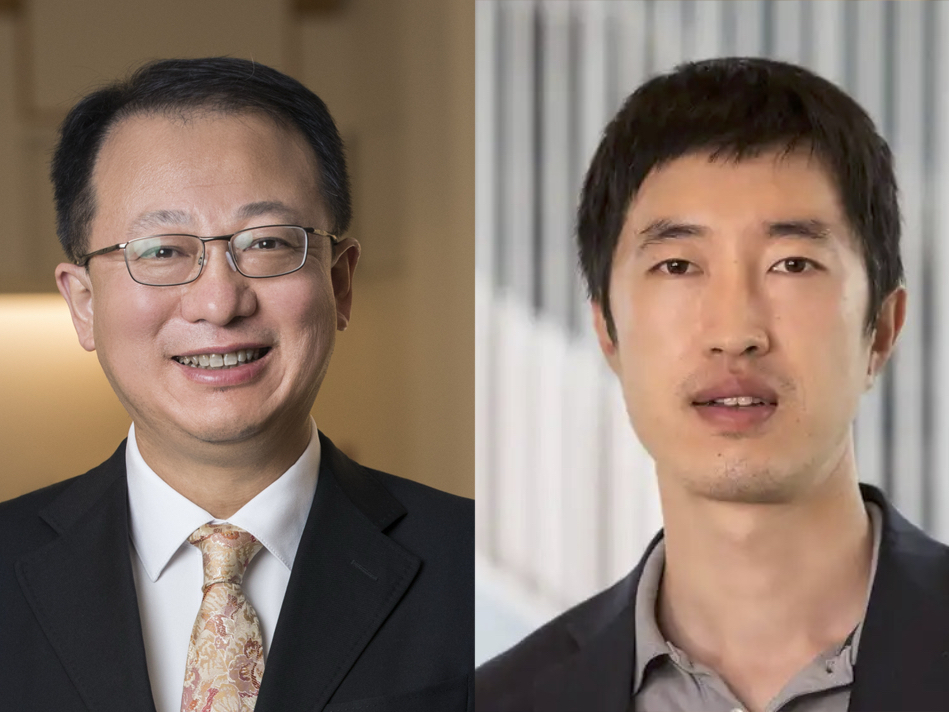

Kunal Agrawal collaborating to study properties of new computer memories

Computers have a hierarchy of memory, with the memory closest to the processor performing the fastest but storing the least data. Improved technology is flattening that hierarchy, but the practical tools and theory currently used to understand memory were not designed for this “flatland” terrain.

Kunal Agrawal, associate professor of computer science in the McKelvey School of Engineering at Washington University in St. Louis, is joining a team of collaborators who, with a four-year, $1.2 million grant from the National Science Foundation, seek to understand how the structure of memory is changing and to develop new theoretical models and algorithms for the new memory subsystems. She is collaborating with Michael Bender, professor of computer science at Stony Brook University; Martin Farach-Colton, professor of computer science at Rutgers University and lead investigator; and Jeremy Fineman, Provost’s Distinguished Associate Professor and Wagner Term Chair in Computer Science at Georgetown University. Washington University’s portion of the grant is $299,997.

In this theoretical project, Agrawal and collaborators will investigate how to manage memory resources to ensure the best performance.

“Computers have many processors and cores that are all accessing this memory system, and they are fighting over the resources because some levels of the memory hierarchy are shared,” Agrawal said. “These cores could be running independent applications, the same parallel application or it could be a combination. We want to get the best performance that we can in all of these scenarios.”

“Parallelism is here to stay,” Agrawal said. “Now we have eight cores on a machine, but as we get more and more cores, this work becomes more relevant.”

While this is not a new field, it is more important now because the properties of the different types of memory are changing. The team will look at the new kinds of memory coming online: some that are fast, some that read quickly but write slowly, and some that can handle only a certain number of writes before the memory is useless, Agrawal said.

The researchers also will look at streaming and semi-streaming applications for large data sets.

“Previously, streaming applications often used a one-pass algorithm where you read the data only once because it was too big,” she said. “Now, you can afford to do more than one read, and this makes a big difference in algorithm design.”
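
As a rough illustration of why a second read can matter, consider finding an item that makes up more than half of a large data set. This example is not taken from the project, and the function names and the `open_stream` callback are hypothetical. With one pass and constant memory, the well-known Boyer-Moore voting method can only propose a candidate; a second pass over the same data can confirm or reject it. A minimal Python sketch, assuming the data can be re-read by calling `open_stream()` again:

```python
from typing import Callable, Hashable, Iterable, Optional


def majority_candidate(stream: Iterable[Hashable]) -> Optional[Hashable]:
    """Pass 1 (Boyer-Moore voting): track one candidate and one counter."""
    candidate, count = None, 0
    for item in stream:
        if count == 0:
            # No surviving candidate: adopt the current item.
            candidate, count = item, 1
        elif item == candidate:
            count += 1
        else:
            count -= 1
    return candidate


def majority_two_pass(
    open_stream: Callable[[], Iterable[Hashable]],
) -> Optional[Hashable]:
    """Pass 2 re-reads the data to check that the candidate is a true majority."""
    candidate = majority_candidate(open_stream())
    total = hits = 0
    for item in open_stream():
        total += 1
        hits += item == candidate
    # Return the candidate only if it occurs in more than half of the items.
    return candidate if hits * 2 > total else None
```

On data with no majority item, the first pass alone still returns some candidate, which is why the verification pass changes what the algorithm can guarantee.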

The team will build new theoretical models to help them learn how to use the memory to solve problems.